In November 2007, Cosina/Voigtländer announced two new lenses for Nikon and Pentax systems: the Ultron 40mm F2 SL II and the Nokton 58mm F1.4 SL II.

This overview covers the new Nokton 58mm F1.4 SL II for Nikon.

The lens is all metal, manual focus, and feels very solid.

The Nokton is a CPU lens, so it can provide exposure information and meters on all Nikon DSLRs.

The aperture ring needs to be set to f/16 (marked in orange).

Samples shot at f/1.4:

The depth of field is of course very shallow at f/1.4, and the correct focus distance is not always easy to set.

As a focusing aid, the green dot in the viewfinder can be used: it is displayed when the subject in the center of the frame is in focus (the AF mode is set to Single Area [.]).

f/1.6 1/40s (handheld, no tripod)

1:1 crop of the lower-left corner

f/1.4 1/10s (tripod)

1:1 crop

f/2.0 1/60s

1:1 crop

f/3.2 1/125s

1:1 crop

f/2.5 1/100s

f/2.5 1/80s

f/3.5 1/200s

f/2.8 1/125s

f/8 1/800s

1:1 crop

f/7.1 1/800s

1:1 crop

f/8 1/1000s

1:1 crop

First panoramic image using the Nokton:

Using the Pano Tools, the 'b' parameter, which compensates for barrel distortion, was optimized to a very low value of -0.001406.

f/6.3 1/640s

Night exposures with the distance ring at the infinity stop:

f/1.4 13s

f/1.4 13s

f/2 6s

f/2.8 10s

A .NET nullable type is a data type accepting a null value.

So how is it different from assigning a null value in C or C++?

In C/C++ a null is a 0.

This is how the null is defined in C/C++:

#define NULL 0

The #define statement is a preprocessor directive: the preprocessor substitutes the defined value into the source text before the compiler sees it.

BTW: Values like TRUE or FALSE are also plain int values:

#define FALSE 0

#define TRUE 1

But in .NET a null and a 0 are two different values.

In C#, basic data types like int or bool are not nullable types by default.

Promoting one of them to a nullable type adds null to its value set. Take bool, for example: the bool type has exactly two values, true and false. A nullable bool adds null to the set, increasing the cardinality to 3.

Useful? Yes: this makes it a perfect data type for handling an undefined state in addition to the boolean result.

Nullable<bool> flag = null;

Or using the short form (T? = nullable):

bool? flag = null;

In Windows PowerShell:

[nullable[bool]]$flag = $null

Note: extending the value set can change the semantics (the meaning) of existing syntax (the form).

The following is no longer valid, because a nullable bool cannot be implicitly converted to a bool to evaluate the expression.

Is flag null or false?

if(!flag)

{

Console.WriteLine("false");

}

You need to be specific in the evaluation, because squeezing a set of 3 values into a set of 2 values is not possible without data loss.

if(flag == false)

{

Console.WriteLine("false");

}
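The same three-state check can be sketched in Windows PowerShell. This is only an illustration: the function name Get-FlagState is hypothetical and not part of the original code. PowerShell converts $null to $false in a condition, so the null test has to come first to keep the third state.

```powershell
# Hypothetical helper (name chosen for illustration only): maps a nullable
# bool to one of three states instead of squeezing it into two.
function Get-FlagState([nullable[bool]]$flag)
{
    if ($null -eq $flag)
    {
        'undefined'    # the third value added by the nullable type
    }
    elseif ($flag)
    {
        'true'
    }
    else
    {
        'false'
    }
}

Get-FlagState $null     # undefined
Get-FlagState $true     # true
Get-FlagState $false    # false
```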

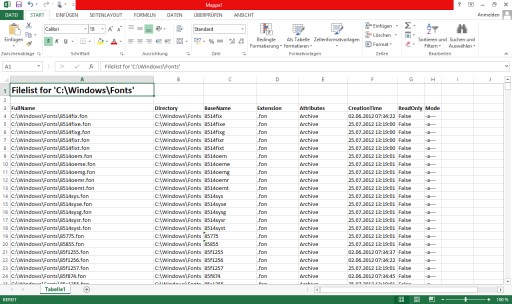

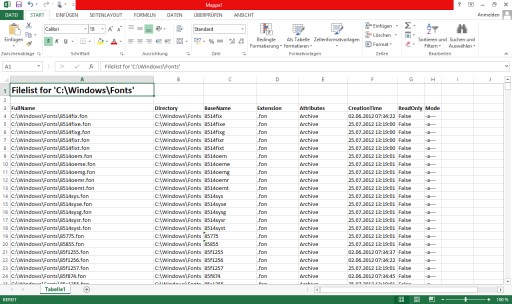

Windows PowerShell has several cmdlets to output data in different formats, for example CSV, XML, HTML and JSON, but Excel is missing. And this is for a good reason: an Excel document is specific to the problem and not to the data. CSV aligns the data in text rows, XML/HTML pack data in a tag structure, and JSON uses its own text structure to serialize, but no one stores raw data in the Excel format for its own sake.

If you want to use Excel with your data and you don't need any additional formatting, go with the fast CSV. But if you want to create a native Excel document with a customized data presentation, you can use ConvertTo-XLSX.

The following picture shows how ConvertTo-XLSX outputs the data. The data might reside on different sheets or positions and might be transformed using the various Excel graphical functions. This code is about fast automation and uses only a little of what Excel has to offer to format your data.

The fast automation is achieved by using an Excel Range and assigning all data in one automation step. The header line is still written using individual cell automation to allow a customizable appearance like bold or colored entries.

Once all data is collected, the data is transferred to a matrix. Then the data is assigned to an Excel Range using ToExcelColumn to compose the area (A1 notation).

Function ToExcelColumn([int]$col)

{

# Author: Jürgen Eidt, Dec 2012

# to Excel column name conversion

[string]$base26 = ""

# Convert to modified base26

while ($col -gt 0)

{

[int]$r = ($col - 1) % 26

$base26 = [char]([int][char]'A' + $r) + $base26

$col = ($col - 1 - $r) / 26

}

$base26

}
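As a small illustration of how the range address is composed, the sketch below builds the A1 notation for a block of data without starting Excel. The layout (headings in row 1, 100 data rows, 11 columns) is an assumption made up for this example. The function is a compact copy of the one above, repeated only so the sketch runs on its own.

```powershell
# Same algorithm as ToExcelColumn above, repeated so the sketch is self-contained.
Function ToExcelColumn([int]$col)
{
    [string]$base26 = ""
    while ($col -gt 0)
    {
        [int]$r = ($col - 1) % 26
        $base26 = [string][char]([int][char]'A' + $r) + $base26
        $col = ($col - 1 - $r) / 26
    }
    $base26
}

# Assumed layout (illustration only): data starts in row 2, column 1.
[int]$firstRow = 2
[int]$firstCol = 1
[int]$Rows     = 100
[int]$Columns  = 11

# The matrix must have the same shape as the target range.
$matrix = New-Object 'string[,]' $Rows, $Columns

$rangeArg = "{0}{1}:{2}{3}" -f (ToExcelColumn $firstCol), $firstRow, (ToExcelColumn ($firstCol + $Columns - 1)), ($firstRow + $Rows - 1)

$rangeArg                                                     # A2:K101
"{0} x {1}" -f $matrix.GetLength(0), $matrix.GetLength(1)     # 100 x 11
```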

The ConvertTo-XLSX function accepts pipe input and optional parameters for Title and FilePath to store the Excel file.

Note:

In this example, one specific Excel version is used:

$xl = New-Object -ComObject "Excel.Application.15"

For general use:

$xl = New-Object -ComObject "Excel.Application"

Function ConvertTo-XLSX

{

# Author: Jürgen Eidt, Dec 2012

param(

[Parameter(Mandatory, ValueFromPipeline)]

$InputObject,

[Parameter()]

[string]$Title,

[Parameter()]

[string]$FilePath

)

begin

{

$objectsToProcess = @()

}

process

{

$objectsToProcess += $inputObject

}

end

{

# create the Excel object

$xl = New-Object -ComObject "Excel.Application.15"

$wb = $xl.Workbooks.Add()

$ws = $wb.ActiveSheet

$cells = $ws.Cells

[int]$_row = 1

[int]$_col = 1

# add optional title

if($title -ne "")

{

$cells.item($_row,$_col) = $Title

$cells.item($_row,$_col).font.bold = $true

$cells.item($_row,$_col).font.size = 18

$_row += 2

}

if($objectsToProcess.Count -gt 0)

{

$Columns = $_col

# add column headings in bold

foreach($property in $objectsToProcess[0].PSobject.Properties)

{

($cell = $cells.item($_row,$_col)).Value2 = $property.Name

$cell.font.bold = $true

$_col++

}

[int]$Rows = $objectsToProcess.Count

[int]$Columns = $_col - $Columns

# create the data matrix that is later copied to the Excel range

$XLdataMatrix = New-Object 'string[,]' ($Rows),($Columns)

$z = 0

# add the data

foreach($data in $objectsToProcess)

{

$s = 0

# write each property data to the matrix

foreach($property in $data.PSobject.Properties)

{

if($s -ge $Columns)

{

break

}

if($null -ne $property.Value)

{

$XLdataMatrix[$z,$s] = $property.Value.ToString()

}

$s++

}

$z++

}

$_row++

$_col = 1

# create the range argument for the matching data matrix, for example: "A1:K100"

$rangeArg = "{0}{1}:{2}{3}" -f (ToExcelColumn $_col), $_row, (ToExcelColumn $Columns), ($_row+$Rows-1)

# copy the data matrix to the range

$ws.Range($rangeArg).Value2 = $XLdataMatrix

}

[void]$ws.Columns.AutoFit()

[void]$ws.Rows.AutoFit()

# show the new created Excel sheet

$xl.Visible = $true

if($FilePath)

{

$wb.SaveAs($FilePath)

}

# release Excel object, see http://technet.microsoft.com/en-us/library/ff730962.aspx

$xl.Quit()

[void][System.Runtime.Interopservices.Marshal]::ReleaseComObject($xl)

Remove-Variable xl

}

}

Use the function like all other ConvertTo functions to convert the data presentation. You might want to filter the data first so that only specific properties are used.

$path = "C:\Windows\Fonts"

Get-ChildItem -Path $path -File -Recurse | ConvertTo-XLSX -Title "Filelist for '$path'"
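Because the column headings are taken from the properties of the first object, selecting properties first also selects the columns. A minimal sketch of that filtering step follows. The property list (Name, Length, LastWriteTime) is only an example choice, and the final call is left as a comment because it needs Excel and the ConvertTo-XLSX function from above.

```powershell
$path = "C:\Windows\Fonts"

# Keep only three properties; these become the three columns.
$files = Get-ChildItem -Path $path -File -Recurse |
    Select-Object -Property Name, Length, LastWriteTime

# Inspect the property names that would be used as column headings.
$files | Select-Object -First 1 | ForEach-Object { $_.PSObject.Properties.Name }

# Then pipe the filtered objects into the function defined above:
# $files | ConvertTo-XLSX -Title "Filelist for '$path'"
```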

For fast Excel automation, you assign the data matrix to an Excel Range, but ranges in Excel use letters for the column.

Columns run from A to Z, then continue with AA through ZZ, and with AAA from there all the way to the system limit.

So how do you convert the column index to the Excel column name?

It is not a plain base 26 numbering scheme: for a zero-based index, the ones-digit has a value range from 0..25 and all higher order digits have a range from 1..26.

Bijective base 26 on http://en.wikipedia.org/wiki/Hexavigesimal talks about this, but has a broken algorithm. AAA is not 677 but 703 (corrected for one-based numbering). The implementation skips one letter in the higher order digits.
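As a worked check of these figures, read each letter as a digit with A = 1 through Z = 26 in the one-based numbering:

```latex
\begin{align*}
\mathrm{ZZ}  &= 26 \cdot 26^{1} + 26 \cdot 26^{0} = 676 + 26 = 702 \\
\mathrm{AAA} &= 1 \cdot 26^{2} + 1 \cdot 26^{1} + 1 \cdot 26^{0} = 676 + 26 + 1 = 703 \\
\mathrm{ZA}  &= 26 \cdot 26^{1} + 1 \cdot 26^{0} = 676 + 1 = 677
\end{align*}
```

So 677 corresponds to ZA in this numbering, and AAA follows directly after ZZ at 703.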

The correct numbering is:

1=A

:

26=Z

27=AA

:

702=ZZ

703=AAA

:

This text file contains all columns up to 20000:ACOF (and this ZIP from 1..AAAAA).

Combined with the fact that Excel indices start from 1 instead of 0, I developed the following solution (implemented in Windows PowerShell):

Function ToExcelColumn([int]$col)

{

# Author: Jürgen Eidt, Dec 2012

# to Excel column name conversion

[string]$base26 = ""

# Convert to modified base26

while ($col -gt 0)

{

[int]$r = ($col - 1) % 26

$base26 = [char]([int][char]'A' + $r) + $base26

$col = ($col - 1 - $r) / 26

}

$base26

}

For completeness: The reverse operation (from column representation to the actual int value):

Function ExcelColumnToInt([string]$A1)

{

# Author: Jürgen Eidt, Dec 2012

# Excel column name conversion

[int]$col = 0

[char[]]$A1.ToUpperInvariant() | % { $col = 26 * $col + [int]$_ + 1 - [int][char]'A' }

$col

}
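A quick way to gain confidence in both directions is a round trip over the boundary values from the numbering table above. The sketch below uses compact copies of the two functions so that it runs on its own. The loop-based ExcelColumnToInt is a rewrite for readability, not the original pipeline form.

```powershell
# Compact copies of the two conversions, repeated so the sketch is self-contained.
Function ToExcelColumn([int]$col)
{
    [string]$base26 = ""
    while ($col -gt 0)
    {
        [int]$r = ($col - 1) % 26
        $base26 = [string][char]([int][char]'A' + $r) + $base26
        $col = ($col - 1 - $r) / 26
    }
    $base26
}

Function ExcelColumnToInt([string]$A1)
{
    [int]$col = 0
    foreach ($ch in $A1.ToUpperInvariant().ToCharArray())
    {
        # each letter is a digit from 1 (A) to 26 (Z)
        $col = 26 * $col + ([int]$ch - [int][char]'A' + 1)
    }
    $col
}

# Round trip: number -> column name -> number
foreach ($n in 1, 26, 27, 702, 703, 20000)
{
    $name = ToExcelColumn $n
    $back = ExcelColumnToInt $name
    "{0} -> {1} -> {2}" -f $n, $name, $back
}
```

The last line of output should read 20000 -> ACOF -> 20000, matching the end of the text file mentioned above.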

Comparison images with DX lenses on the FX format.

Automatic detection of DX lenses was switched off and the image size was set to FX.

Nikkor 18-70mm @ 18mm, f/7.1

Nikkor 18-70mm @ 18mm, f/7.1 with HB-32 lens hood

Nikkor 18-70mm @ 50mm, f/7.1

Nikkor 18-70mm @ 70mm, f/7.1

Sigma 30mm, f/7.1

Sigma 50-150mm @ 50mm, f/8.0

Sigma 50-150mm @ 150mm, f/8.0